Generative AI is a powerful new tool. It can write stories, draw pictures, and come up with new ideas. Companies want to build these smart features into their apps and websites. But this new power comes with a big problem: keeping people's secrets safe.

Generative AI works by analyzing huge amounts of data. If that data contains your personal messages, photos, or business plans, they could end up in the AI's memory. The people who build these smart tools must put privacy first. This means protecting the user's secrets from the very first step.

We are going to look at three main ways builders can make sure their new AI tools keep your secrets safe.

1. Keep the Secrets at Home

The safest way to keep a secret is to never let it leave your house. The same is true for data. Most generative AI applications work by sending your request to a large, distant server. That server does the thinking and sends back the answer. While your data is moving around, it may be exposed to or saved by other people.

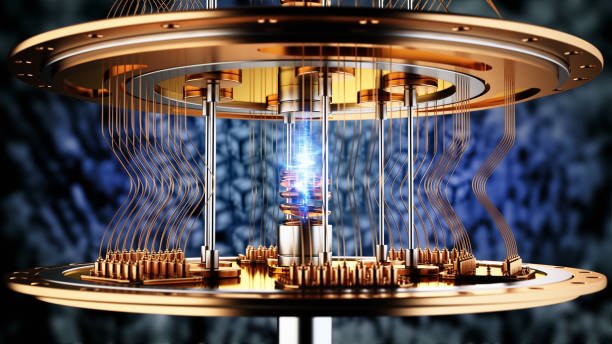

The Local Thinking Chip:

The most effective way to protect data privacy is the local AI thinking chip we discussed earlier.

On-Device Processing: The developer installs the AI brain directly on your phone or computer. When you ask the AI to write a message using your personal messages, those messages never leave your device. The thinking happens right there.

Faster and Safer: Not only is this safer for your secrets, it can also be faster, because there is no waiting on the internet.

Builders should therefore try to use local AI chips for any feature that involves highly personal or secret data.
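Here is a small sketch of how an app might decide where the thinking happens. It is only an illustration: the function names `looks_personal` and `choose_backend`, the `uses_private_files` flag, and the two patterns are all made up for this example, and a real app would need a much more careful check.

```python
import re

# Hypothetical patterns for spotting personal data in a request.
# These two patterns are only a rough illustration, not a complete check.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def looks_personal(prompt: str, uses_private_files: bool) -> bool:
    """Return True if the request seems to touch personal or secret data."""
    return uses_private_files or bool(EMAIL.search(prompt) or PHONE.search(prompt))


def choose_backend(prompt: str, uses_private_files: bool = False) -> str:
    """Pick where the thinking happens: 'local' (on the device) or 'cloud'."""
    return "local" if looks_personal(prompt, uses_private_files) else "cloud"
```

With this sketch, a request to summarize your own chats (`uses_private_files=True`) stays on the device, while a request for a poem about autumn may go to the server.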

2. Teach the AI with Fake Data

The AI's brain must be trained. It learns by studying millions of examples. If a company trains its AI on real customer data, it is nearly impossible to make the AI forget those secrets, and it may repeat them in the future.

The Solution: Synthetic Data

Builders can train the AI with fake data instead. This is called synthetic data.

Making Fakes: They use a computer to generate millions of fake customer names, addresses, and business reports. This fake data looks real to the AI, but it contains no secrets.
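As a rough illustration, fake customers can be made by mixing small lists of made-up words. Everything in this sketch (the word lists, the field names, and the `make_synthetic_customers` function) is an assumption for the example, not a standard tool. Real synthetic data generators are far more advanced.

```python
import random

# Small made-up word lists; none of these come from real customers.
FIRST_NAMES = ["Alex", "Sam", "Jordan", "Taylor", "Morgan"]
LAST_NAMES = ["Rivera", "Chen", "Okafor", "Novak", "Haddad"]
STREETS = ["Oak Street", "Hill Road", "Lake Avenue", "Pine Lane"]
CITIES = ["Springfield", "Riverton", "Lakeside", "Fairview"]


def fake_customer(rng: random.Random) -> dict:
    """Build one fake customer record from random pieces."""
    return {
        "name": f"{rng.choice(FIRST_NAMES)} {rng.choice(LAST_NAMES)}",
        "address": f"{rng.randint(1, 999)} {rng.choice(STREETS)}, {rng.choice(CITIES)}",
        "monthly_spend": round(rng.uniform(10, 500), 2),
    }


def make_synthetic_customers(count: int, seed: int = 0) -> list[dict]:
    """Generate `count` fake records; the same seed gives the same records."""
    rng = random.Random(seed)
    return [fake_customer(rng) for _ in range(count)]
```

Calling `make_synthetic_customers(1000)` gives a thousand records that look like customer data but were never copied from a real person.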

Cleaning the Real Data: If they must use real data, they must clean it first. They take out all the names, dates, and places that could identify a person. They change the numbers and mix up the words so the data is no longer a secret. This is called anonymization.

Consequently, the AI learns the rules of writing or drawing without learning any of the user’s actual secrets.
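The cleaning step described above can be sketched in a few lines. This is a simplified example under stated assumptions: the `anonymize` function, the placeholder labels, and the patterns are made up for illustration, and the list of names has to be supplied by the caller. Pattern matching like this misses many cases, so it should not be treated as complete anonymization.

```python
import re

# Hypothetical patterns; a real cleaning tool would cover many more cases.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
DATE = re.compile(r"\b\d{1,2}/\d{1,2}/\d{2,4}\b|\b\d{4}-\d{2}-\d{2}\b")
LONG_NUMBER = re.compile(r"\b\d{6,}\b")


def anonymize(text: str, known_names: list[str]) -> str:
    """Replace known names, emails, dates, and long numbers with placeholders."""
    for name in known_names:
        text = re.sub(rf"\b{re.escape(name)}\b", "[NAME]", text)
    text = EMAIL.sub("[EMAIL]", text)
    text = DATE.sub("[DATE]", text)
    text = LONG_NUMBER.sub("[NUMBER]", text)
    return text
```

For example, `anonymize("Maria Lopez paid on 2024-03-05", ["Maria Lopez"])` returns `"[NAME] paid on [DATE]"`.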

3. Build a Wall Between the User and the Training

When you use a generative AI tool, you are giving it a prompt, or a request. That prompt is your data. Builders must make sure that the prompts you give the AI are not used to train the AI later.

The Two-Way Wall:

No Training by Default: The company must promise that the words you type into the app will not be saved and used to make the AI smarter. The user must be able to opt in if they want to share their data.
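One way to picture "no training by default" is a setting that starts switched off. The sketch below is only an illustration: the `UserSettings` and `TrainingQueue` classes and their fields are hypothetical names, not part of any real product.

```python
from dataclasses import dataclass, field


@dataclass
class UserSettings:
    # Off by default: the user has to turn sharing on themselves.
    share_prompts_for_training: bool = False


@dataclass
class TrainingQueue:
    prompts: list[str] = field(default_factory=list)

    def offer(self, prompt: str, settings: UserSettings) -> bool:
        """Keep the prompt for training only if the user opted in."""
        if not settings.share_prompts_for_training:
            return False  # the prompt is dropped, not stored
        self.prompts.append(prompt)
        return True
```

Because the default is `False`, a builder who forgets to ask the user ends up storing nothing, which is the safe mistake to make.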

The Safety Filter: The builders must put a safety filter on the AI. This filter stops the AI from saying or showing things that are harmful or illegal. It also stops the AI from repeating private information it might have learned by mistake.
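A very simple version of such a filter checks each reply before the user sees it. The sketch below is an assumption-heavy example: the `filter_reply` function, the blocked term, and the patterns are placeholders invented for illustration. Real safety filters are much more sophisticated than a word list and two patterns.

```python
import re

# Hypothetical patterns for private details that should not appear in a reply.
PRIVATE_PATTERNS = {
    "email address": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "card-like number": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}

# Placeholder list of phrases the app refuses to output.
BLOCKED_TERMS = ["banned topic example"]


def filter_reply(reply: str) -> str:
    """Refuse blocked content and hide private-looking details in a reply."""
    lowered = reply.lower()
    if any(term in lowered for term in BLOCKED_TERMS):
        return "Sorry, I can't help with that."
    for label, pattern in PRIVATE_PATTERNS.items():
        reply = pattern.sub(f"[{label} removed]", reply)
    return reply
```

The filter sits between the AI and the user, so even if the AI has learned a private detail by mistake, the detail is hidden before the reply is shown.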

Furthermore, the builders must be honest with the users. They must write a very clear policy that explains what data they keep, how long they keep it, and what they use it for. If the policy is confusing, people will not trust the tool.
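A clear policy is easier to keep honest when the app itself follows the same rules. As a sketch, the three questions (what is kept, for how long, and why) can be written down as data. The `RetentionRule` class, the example rules, and the durations below are all made up for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class RetentionRule:
    data_kind: str       # what is kept
    keep_for: timedelta  # how long it is kept
    purpose: str         # what it is used for


# Hypothetical example policy; the kinds, durations, and purposes are made up.
POLICY = [
    RetentionRule("chat prompts", timedelta(days=30), "showing your chat history"),
    RetentionRule("error logs", timedelta(days=7), "fixing bugs"),
]


def describe(policy: list[RetentionRule]) -> list[str]:
    """Turn each rule into one plain sentence a user can read."""
    return [
        f"We keep {r.data_kind} for {r.keep_for.days} days, for {r.purpose}."
        for r in policy
    ]


def is_expired(rule: RetentionRule, stored_at: datetime, now: datetime) -> bool:
    """Return True once the data has been kept longer than the rule allows."""
    return now - stored_at > rule.keep_for
```

The same list can then produce the sentences shown to users and tell the app when stored data is due for deletion, so the written policy and the real behavior do not drift apart.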

The Final Responsibility

Generative AI is a powerful tool that can do amazing things. But with great power comes great responsibility. Builders must remember that the user’s trust is more valuable than any new feature.

By keeping the thinking local, training the AI with fake data, and building a strong wall between the user and the training data, builders can make sure their new tools are both smart and safe.